BY: Adelia Ibragimova

Introduction

Today, much of the effort in cyber-AI is aimed at automating everything possible. However, it is crucial to understand where this automation is heading. Currently, most initiatives and research in AI security are focused on red-teaming—building systems that simulate attacker behavior, automate vulnerability discovery, and enhance exploitation capabilities. These advances are undoubtedly valuable: they help test infrastructure resilience, refine response strategies, and train analysts. Yet when it comes to defense, this focus creates a strategic imbalance: we are becoming increasingly skilled at attacking but far slower at defending. The blue-team domain remains underdeveloped.

In this paper, I outline a set of best practices developed at EPAM that address this gap, showcasing practical approaches to applying AI within defensive security operations:

- Log Analysis: Using machine learning (ML) and large language models (LLMs) to detect and interpret anomalous behavior across massive telemetry datasets.

- Detection Rule Creation and Translation: Automating the conversion of detection logic between SIEM dialects (e.g., KQL, SPL, YARA‑L) to accelerate coverage expansion and reduce engineering overhead.

- Tier‑1 SOC Automation: Orchestrating AI agents that triage, enrich and route alerts autonomously, reducing analyst fatigue and improving response throughput.

These three focus areas (log analysis, rule translation and Tier‑1 SOC automation) were explored in EPAM’s applied research on AI‑driven security operations, and several of them were implemented as AI‑enabled SOC capabilities. Each addresses a specific pain point in modern SOC operations: data overload, tool fragmentation and operational inefficiency, respectively. Together, they outline a roadmap for how AI can transform defense rather than merely simulate offense.

Log Analysis

For years, traditional Security Operations Centers (SOCs) relied primarily on statistical and signature‑based correlation to flag suspicious events. More recently, state‑of‑the‑art solutions have begun deploying offline‑trained unsupervised and self‑supervised models that detect anomalies across wide, heterogeneous telemetry streams. However, when their alerts are acted on without further validation in a production SOC, these models often produce high false‑positive rates, resulting in noise, analyst fatigue and diminished trust. As an alternative to false‑positive‑prone signatures or a catch‑all anomaly‑detection paradigm, we developed a high‑level architecture for a hybrid machine‑learning pipeline that combines structured anomaly detection with large‑language‑model interpretation layers. By blending the precision of ML‑generated signals with the narrative context and generalization ability of LLMs, we aimed to reduce inherent noise while surfacing insights that approximate an analyst’s reasoning. The goal is alerts that not only pass statistical thresholds but also make semantic sense to analysts.

To achieve both analytical precision and contextual understanding, the pipeline combines generative AI for log summarization with classification and retrieval. The three components are complementary: the generative layer surfaces a meaningful “story” that contextualizes the anomaly; the classification model extracts key entities and actions (similar to named‑entity recognition) into a structured representation; and the retrieval component cross‑references that structured output against a relevant knowledge base and authoritative threat‑intelligence sources to assign tags and severity. The result is a set of contextualized, prioritized detections.
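The interplay of the three layers can be sketched in a few functions. This is a minimal illustration under stated assumptions, not EPAM’s implementation: a regular‑expression extractor stands in for the classification model, keyword overlap against a tiny hypothetical `KNOWLEDGE_BASE` stands in for retrieval over threat intelligence, a string template stands in for the generative LLM layer, and the `key=value` log format is assumed.

```python
import re
from dataclasses import dataclass, field

# Hypothetical knowledge base: each entry maps a set of action keywords to a
# tag and a severity. Keyword overlap stands in for real retrieval.
KNOWLEDGE_BASE = [
    ({"login", "failed"}, "credential-access", "high"),
    ({"role", "assigned"}, "privilege-escalation", "critical"),
    ({"list", "buckets"}, "discovery", "medium"),
]
SEVERITY_ORDER = ["low", "medium", "high", "critical"]


@dataclass
class Detection:
    raw: str
    entities: dict = field(default_factory=dict)
    tags: list = field(default_factory=list)
    severity: str = "low"
    narrative: str = ""


def classify(raw: str) -> dict:
    """Extract key entities and the action (regex stand-in for an NER-style model)."""
    patterns = {"user": r"user=(\S+)", "ip": r"src_ip=(\S+)", "action": r"action=(\S+)"}
    entities = {}
    for name, pattern in patterns.items():
        match = re.search(pattern, raw)
        if match:
            entities[name] = match.group(1)
    return entities


def retrieve(entities: dict) -> tuple:
    """Match the action's words against the knowledge base; keep the highest severity."""
    words = set(re.split(r"[_\W]+", entities.get("action", "").lower()))
    tags, severity = [], "low"
    for keywords, tag, level in KNOWLEDGE_BASE:
        if keywords <= words:
            tags.append(tag)
            if SEVERITY_ORDER.index(level) > SEVERITY_ORDER.index(severity):
                severity = level
    return tags, severity


def summarize(d: Detection) -> str:
    """Template stand-in for the generative layer's analyst-facing narrative."""
    who = d.entities.get("user", "an unknown principal")
    source = d.entities.get("ip", "an unknown source")
    action = d.entities.get("action", "an unknown action")
    context = ", ".join(d.tags) or "no known pattern"
    return f"{who} performed {action} from {source}; matches {context} (severity {d.severity})."


def interpret(raw: str) -> Detection:
    """Run classification, retrieval and summarization in sequence."""
    d = Detection(raw=raw, entities=classify(raw))
    d.tags, d.severity = retrieve(d.entities)
    d.narrative = summarize(d)
    return d
```

For instance, `interpret("user=alice src_ip=203.0.113.7 action=login_failed")` yields a `high`‑severity detection tagged `credential-access`. Replacing any stand‑in with a real model changes only the body of the corresponding function; the structured `Detection` record passed between layers stays the same.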

Market Research Insights

In our research, we focused on infrastructure‑level and cloud telemetry logs (data sources such as API calls, account logins and database connections), since these are fundamental to core operational and security functions. Other types of telemetry (application logs, endpoint logs, etc.) follow similar patterns, so the same principles apply. We found that the major cloud providers (Microsoft, Google, Amazon) offer powerful analytics solutions, often with proprietary LLM‑enabled tools. We examined Microsoft’s Azure AI Foundry in detail: a comprehensive AI/ML suite integrated with existing Azure services. Foundry includes modules for AI engineering (pipelines, experiments, deployments), model management and an agent service for orchestrating multi‑agent processes. It offers a unified portal for data preparation, model training, evaluation and deployment, enabling rapid development and scalable production.

Most of the automations we researched and built were initially deployed on Azure, which, in our assessment, offered the richest integration of AI and security tooling for enterprise customers. Azure AI Foundry includes an ML pipeline‑orchestration tool that can ingest logs, perform preprocessing and feature engineering, train detection models and expose the results through a graphical or API interface. It integrates with Azure OpenAI Service, enabling LLM‑based interpretation of anomalies and human‑readable alert descriptions for SOC analysts.
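The ingest, feature‑engineering and detection steps can be illustrated with a small, dependency‑free sketch. It assumes events arrive as dictionaries with hypothetical `principal` and `api` keys, and it uses a robust (median/MAD) modified z‑score as a stand‑in for a trained detection model; the 0.6745 constant and 3.5 cut‑off are the values conventionally used with that score, not parameters taken from the Foundry pipeline.

```python
import statistics
from collections import Counter, defaultdict


def engineer_features(events):
    """Aggregate raw events into per-principal features (call volume, API diversity)."""
    calls = Counter()
    apis = defaultdict(set)
    for event in events:
        calls[event["principal"]] += 1
        apis[event["principal"]].add(event["api"])
    return {p: {"calls": calls[p], "distinct_apis": len(apis[p])} for p in calls}


def robust_anomalies(features, key="calls", threshold=3.5):
    """Flag principals whose modified z-score (median/MAD based) exceeds the threshold.

    Only unusually high values are flagged. This is a statistical stand-in
    for a trained model, not the model itself.
    """
    if not features:
        return []
    values = [f[key] for f in features.values()]
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread to compare against
    return [p for p, f in features.items() if 0.6745 * (f[key] - median) / mad > threshold]
```

The median and MAD are used instead of the mean and standard deviation because a single extreme principal would otherwise inflate the spread and mask itself.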

Within AI Foundry, anomalies detected in logs were enriched with MITRE ATT&CK mappings, asset context (owner, deployment environment, business impact) and recommended remediation actions. LLMs linked alerts to known tactics, techniques and procedures (TTPs), thereby cross‑validating the machine‑learning signal. Analysts were presented not only with a red flag but with a coherent, actionable summary. For example, the AI identified unusual API calls, explained that this behavior could indicate credential stuffing and outlined steps to investigate and mitigate it. Generative summarization and reasoning allowed analysts to assess severity and context quickly, without manually reading lengthy logs.
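The enrichment step can be sketched as a lookup that attaches an ATT&CK technique, asset context and remediation steps, then weights a base score by business impact. The ATT&CK technique IDs below are real, but the anomaly‑to‑technique mapping, the `ASSETS` inventory, the remediation lists and the impact weights are hypothetical placeholders.

```python
# The ATT&CK technique IDs are real; mapping anomaly types to them is simplified.
ATTACK_MAP = {
    "credential_stuffing": ("T1110.004", "Brute Force: Credential Stuffing"),
    "cloud_role_escalation": ("T1098.003", "Account Manipulation: Additional Cloud Roles"),
}
# Hypothetical asset inventory and remediation playbook.
ASSETS = {
    "payments-api": {"owner": "payments-team", "environment": "production", "business_impact": "high"},
    "dev-sandbox": {"owner": "platform-team", "environment": "development", "business_impact": "low"},
}
REMEDIATION = {
    "credential_stuffing": ["Review failed-login sources", "Enforce MFA on targeted accounts"],
    "cloud_role_escalation": ["Revoke the new role assignment", "Audit the granting principal"],
}
IMPACT_WEIGHT = {"low": 0.5, "medium": 1.0, "high": 1.5}  # hypothetical weights
UNKNOWN_ASSET = {"owner": "unknown", "environment": "unknown", "business_impact": "medium"}


def enrich(anomaly_type: str, asset: str, base_score: float) -> dict:
    """Attach ATT&CK, asset context and remediation; scale a 0-10 score by business impact."""
    technique_id, technique = ATTACK_MAP.get(anomaly_type, ("unmapped", "unmapped"))
    context = ASSETS.get(asset, UNKNOWN_ASSET)
    score = min(10.0, base_score * IMPACT_WEIGHT[context["business_impact"]])
    return {
        "attack": f"{technique_id} {technique}",
        "asset": {"name": asset, **context},
        "score": score,
        "remediation": REMEDIATION.get(anomaly_type, ["Escalate for manual review"]),
    }
```

With these placeholder weights, the same credential‑stuffing signal scores higher on a production payments service than on a development sandbox, which is the prioritization effect that asset context is meant to provide.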

The Agent Service tool framework includes built‑in modules for Deep Research, browser automation, Azure Functions (e.g., Python scripts, PowerShell) and knowledge‑graph traversal. Together these functions enable complex reasoning, classification and enrichment tasks to be performed as part of a log analysis pipeline or across multiple cooperating agents.

Connected agents (a preview feature) allow complex tasks to be split across specialized subagents without custom code. We used this feature for heavy‑duty log processing: LLM summarization ran on one node, while classification and retrieval tasks were distributed to a separate set of containers. This separation let us handle heavy loads while maintaining a tight feedback loop and using resources efficiently.
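The split between a summarization node and a classification/retrieval pool can be mimicked locally with Python’s `concurrent.futures`. This sketch does not use the Foundry connected‑agents API; `summarize_batch` and `classify_record` are hypothetical stand‑ins for the subagents, and threads stand in for separate nodes and containers.

```python
from concurrent.futures import ThreadPoolExecutor


def summarize_batch(records):
    """Hypothetical stand-in for the LLM summarization subagent."""
    return f"{len(records)} records summarized"


def classify_record(record):
    """Hypothetical stand-in for one classification/retrieval worker."""
    return "suspicious" if "failed" in record else "benign"


def process(records, workers=4):
    # A single-worker executor plays the summarization node; a separate pool
    # plays the classification/retrieval containers. Both run concurrently.
    with ThreadPoolExecutor(max_workers=1) as summarizer, ThreadPoolExecutor(max_workers=workers) as pool:
        summary = summarizer.submit(summarize_batch, records)
        labels = list(pool.map(classify_record, records))  # map preserves input order
        return summary.result(), labels
```

Because each stage sits behind its own executor, the classification pool can be resized independently of the summarization node, which mirrors the resource‑efficiency argument above.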

Azure Logic Apps can be attached to agents through the portal, enabling low‑code workflow automation that triggers external alerts or executes remediation actions based on the AI’s classification and severity. For example, if the AI detects a privilege‑escalation anomaly on a critical cloud workload, Logic Apps can automatically revoke the session and notify the appropriate on‑call engineer. Linking AI detection directly to remediation in this way can significantly reduce time‑to‑containment.
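The decision logic behind such a workflow can be sketched as a function that maps classification, severity and asset criticality to actions, plus a helper that packages them as a JSON POST request. Logic Apps workflows can be started by an HTTP request trigger, but the payload shape, the action names and the trigger URL used here are assumptions, not a documented contract.

```python
import json
import urllib.request


def plan_response(classification: str, severity: str, asset_criticality: str) -> list:
    """Map an AI classification to hypothetical remediation actions."""
    actions = ["open_ticket"]
    if severity in ("high", "critical"):
        actions.append("notify_on_call")
    if classification == "privilege_escalation" and asset_criticality == "critical":
        actions.append("revoke_session")
    return actions


def build_trigger_request(trigger_url: str, alert: dict, actions: list) -> urllib.request.Request:
    """Package the alert and planned actions as a JSON POST to an HTTP-triggered workflow."""
    body = json.dumps({"alert": alert, "actions": actions}).encode("utf-8")
    return urllib.request.Request(
        trigger_url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
```

Calling `urllib.request.urlopen(req)` on the returned request would send it; that call is left out so the sketch runs without network access or a real trigger URL.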

At a high level, this research confirmed that ML‑based analysis of infrastructure logs, combining structured anomaly detection with an LLM interpreter, yields a lower false‑positive rate, better interpretability and more actionable alerts than purely statistical or heuristic methods. The synergy between quantitative detection and qualitative summarization produced alerts that prioritized real threats and gave analysts the context needed for effective triage.

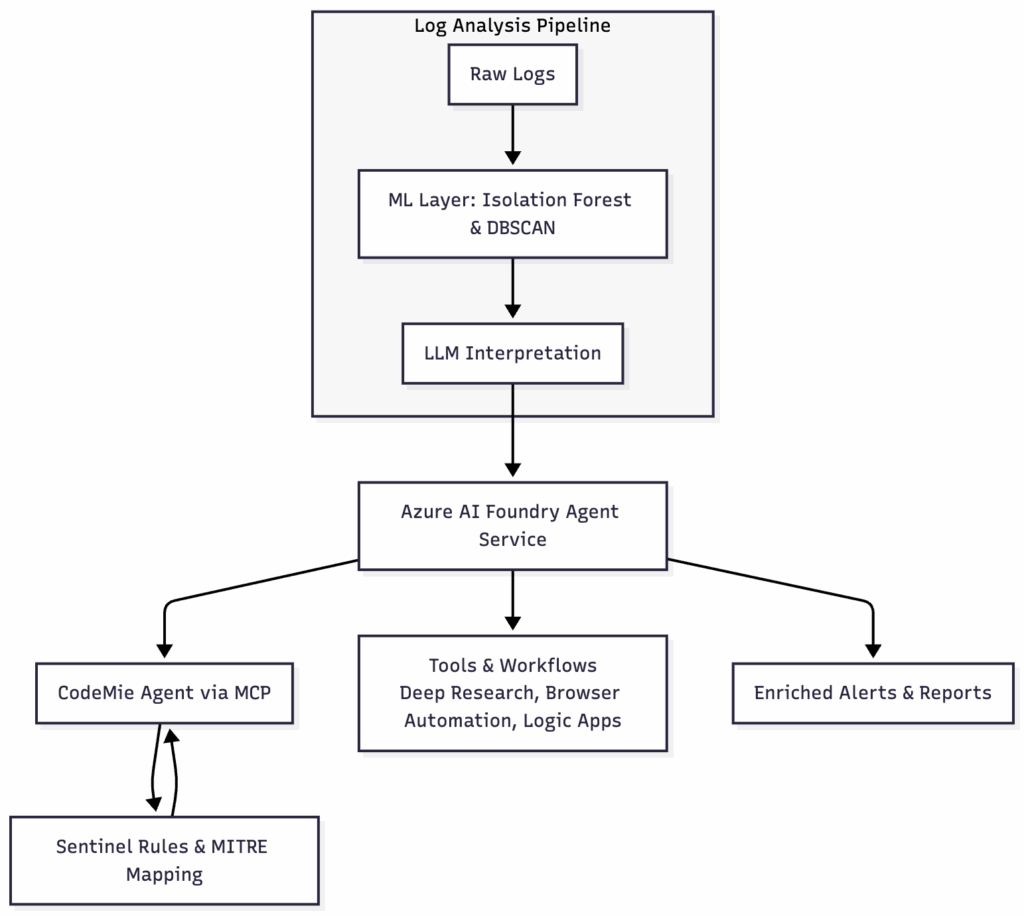

Schema 1. High‑level log‑analysis pipeline

This diagram shows how source logs (A) pass through a machine‑learning layer (B) and are interpreted by a large‑language‑model layer (C) to generate contextualized output (D). Rather than hard‑wiring these steps as in a traditional linear pipeline, the agents (E) orchestrate them as a modular, extensible workflow. Within Foundry, the agent uses a suite of tools (F), including Deep Research, browser automation, Azure Functions and retrieval connectors, to enrich and contextualize the detections. This modular design allows proprietary or open‑source tools to be integrated at each step, making the pipeline adaptable to different environments.
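The modularity described for Schema 1 can be sketched as a registry in which each stage is a replaceable function. The stage names, the `failed_logins` field and the scoring rule are hypothetical; the point is that swapping one tool for another only means registering a different function under the same stage name.

```python
from typing import Callable, Dict

Stage = Callable[[dict], dict]
REGISTRY: Dict[str, Stage] = {}


def register(stage: str):
    """Decorator that installs (or replaces) the tool used for a pipeline stage."""
    def decorator(fn: Stage) -> Stage:
        REGISTRY[stage] = fn
        return fn
    return decorator


@register("ml")
def threshold_scorer(event: dict) -> dict:
    # Hypothetical rule: many failed logins -> high anomaly score.
    return {**event, "anomaly_score": 0.9 if event.get("failed_logins", 0) > 20 else 0.1}


@register("llm")
def template_explainer(event: dict) -> dict:
    # Stand-in for an LLM call that turns the score into analyst-facing text.
    level = "suspicious" if event["anomaly_score"] >= 0.5 else "routine"
    return {**event, "explanation": f"Activity looks {level} (score {event['anomaly_score']})."}


def run(event: dict, stages=("ml", "llm")) -> dict:
    """Pass the event through each registered stage in order."""
    for stage in stages:
        event = REGISTRY[stage](event)
    return event
```

Replacing the ML stage is a one‑line registration such as `register("ml")(my_scorer)`, where `my_scorer` is a hypothetical wrapper around whatever model a team already runs; `run` itself does not change.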

Detection Rule Translation: From Static RAG to Agentic AI

One of the most impactful applications of AI within SOC automation lies in detection‑rule translation. Detection rules, often encoded in domain-specific languages like Splunk’s SPL, Google Chronicle’s YARA-L, and Microsoft’s KQL, define how threats are identified within telemetry. SOCs manage hundreds or even thousands of rules, with each new platform or SIEM (Security Information and Event Management) requiring its own syntax. As enterprises adopt multi‑cloud architectures, moving detection content across systems becomes a significant challenge, creating a lengthy translation and tuning cycle.
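To make the translation problem concrete, the sketch below converts a restricted subset of SPL (space‑separated `field=value` filters, implicitly AND‑ed) into a KQL `where` clause over the Microsoft Sentinel `SecurityEvent` table. The `FIELD_MAP` is a hypothetical example of the field mappings a real migration must curate. It also handles one semantic trap: SPL matches field values case‑insensitively, so string comparisons are emitted with KQL’s case‑insensitive `=~` rather than `==`.

```python
import json
import shlex

# Hypothetical mapping from Splunk Windows event fields to columns of the
# Microsoft Sentinel SecurityEvent table; real migrations need a reviewed map.
FIELD_MAP = {"EventCode": "EventID", "user": "TargetUserName", "src_ip": "IpAddress", "host": "Computer"}


def spl_filters_to_kql(spl_search: str, table: str = "SecurityEvent") -> str:
    """Translate space-separated SPL field=value filters (implicit AND) into KQL."""
    clauses = []
    for token in shlex.split(spl_search):  # shlex strips quotes: user="admin" -> user=admin
        field, sep, value = token.partition("=")
        if not sep:
            raise ValueError(f"unsupported SPL token: {token!r}")
        if field not in FIELD_MAP:
            raise KeyError(f"no mapping for SPL field {field!r}")
        column = FIELD_MAP[field]
        if value.isdigit():
            clauses.append(f"{column} == {value}")
        else:
            # SPL matches field values case-insensitively; KQL's =~ preserves that.
            clauses.append(f"{column} =~ {json.dumps(value)}")
    if not clauses:
        raise ValueError("empty SPL search")
    return f"{table} | where " + " and ".join(clauses)
```

Even this toy subset shows why syntax accuracy and logic conformance are measured separately: emitting `==` for string fields would produce valid KQL that silently matches fewer events than the original SPL.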

To address this, EPAM’s research introduced an agentic Detection Rule Translator that combines retrieval‑augmented generation (RAG) with an agent framework. Across three enterprise migration waves (SPL→YARA‑L, SPL→KQL and KQL→YARA‑L; n ≈ 900 rules), the system achieved:

- ≈95 % syntax accuracy (up from a 75 % baseline).

- ≈89 % logic conformance (up from a 66 % baseline).

- A 15–20‑percentage‑point improvement in field‑mapping accuracy.

- A 40–60 % reduction in reviewer edit distance, cutting migration time from months to weeks.
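The source does not specify the unit of reviewer edit distance; the sketch below assumes character‑level Levenshtein distance between the translator’s output and the reviewer’s final rule (token‑level distance would be an equally valid choice), plus the relative reduction behind a figure such as 40–60 %.

```python
def edit_distance(a: str, b: str) -> int:
    """Character-level Levenshtein distance (insertions, deletions, substitutions)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # delete char_a
                current[j - 1] + 1,                    # insert char_b
                previous[j - 1] + (char_a != char_b),  # substitute (free if equal)
            ))
        previous = current
    return previous[-1]


def relative_reduction(baseline: float, improved: float) -> float:
    """Fractional drop in reviewer edits, e.g. 0.5 means half as many edits."""
    return (baseline - improved) / baseline
```

Per rule, `baseline` would be the distance from the baseline translator’s output to the reviewed rule and `improved` the same distance for the agentic translator; averaging the per‑rule reductions gives a single percentage.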

The textual rationales explaining the translation logic were highly consistent (BERTScore ≈ 0.93), and human evaluators found them convincing. These results show that an agentic, SOC‑oriented RAG pipeline can deliver reproducible, transparent and efficient rule migration at scale.
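The translator’s internals are not published here, so the following is only a generic sketch of the retrieve, generate, validate and retry loop that an agentic RAG translator typically runs. `generate` and `validate` are injected hypothetical callables (in practice an LLM call and a SIEM syntax checker), and retrieval ranks past translations by simple token overlap instead of embeddings.

```python
from typing import Callable, List, Optional, Tuple

Pair = Tuple[str, str]  # (source rule, reviewed target rule)


def retrieve_examples(rule: str, corpus: List[Pair], k: int = 2) -> List[Pair]:
    """Rank past translations by token overlap with the new rule (embedding stand-in)."""
    tokens = set(rule.split())
    return sorted(corpus, key=lambda pair: len(tokens & set(pair[0].split())), reverse=True)[:k]


def translate(
    rule: str,
    corpus: List[Pair],
    generate: Callable[[str, List[Pair], Optional[List[str]]], str],
    validate: Callable[[str], List[str]],
    max_attempts: int = 3,
) -> Tuple[str, int]:
    """Retrieve examples, generate a candidate, validate it, and retry with the errors."""
    examples = retrieve_examples(rule, corpus)
    feedback: Optional[List[str]] = None
    for attempt in range(1, max_attempts + 1):
        candidate = generate(rule, examples, feedback)
        errors = validate(candidate)
        if not errors:
            return candidate, attempt
        feedback = errors  # the next generation sees what went wrong
    raise RuntimeError(f"no valid translation after {max_attempts} attempts: {feedback}")
```

Feeding validator errors back into generation is what distinguishes this loop from a single static RAG call; a stub `generate` that fails once and then succeeds is enough to exercise the retry path without an LLM.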

Tier‑1 SOC Automation

The Tier‑1 layer of a Security Operations Center remains the most labor‑intensive part of incident management, requiring real‑time triage, enrichment and routing. Our research explored how AI can automate these repetitive, time‑consuming tasks so that analysts can focus on complex cases.

Market Research Insights

Our market review found that very few solutions offer the deep reasoning, contextual awareness and multi‑agent coordination described in this section. Many existing tools rely largely on static playbooks and single‑model LLM pipelines. EPAM’s agentic framework differs in that it integrates multiple reasoning modalities, retrieval connectors and feedback channels, producing a more flexible and robust solution.

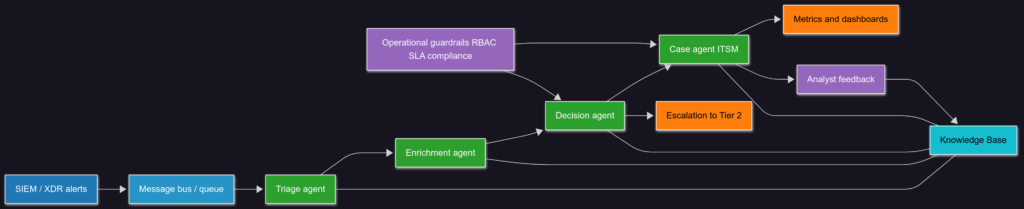

Schema 2. High‑level SOC Tier‑1 automation

The diagram illustrates a multi‑agent framework designed to automate Tier‑1 operations. The agent coordinator dispatches newly ingested events to specialized agents: a risk evaluation agent, a case‑management agent and a feedback agent. The risk evaluation agent performs risk scoring and determines whether escalation to Tier‑2 is required. The case‑management agent (Tier‑1) creates incident cases, applies enrichment templates and prepares structured reports. The feedback agent monitors the actions taken and collects analyst feedback to refine the system.
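The coordinator’s dispatch flow can be sketched with one method per agent. The risk factors, their weights and the escalation threshold of 70 are hypothetical; a weighted average is used only to illustrate how the risk evaluation agent might decide on Tier‑2 escalation.

```python
from dataclasses import dataclass, field

ESCALATION_THRESHOLD = 70.0  # hypothetical cut-off on a 0-100 risk scale


@dataclass
class Event:
    id: str
    signals: dict  # hypothetical risk factors, each scored 0-100


@dataclass
class Coordinator:
    weights: dict  # hypothetical relative importance of each risk factor
    cases: list = field(default_factory=list)
    feedback_log: list = field(default_factory=list)

    def evaluate(self, event: Event):
        """Risk evaluation agent: weighted average of signals, plus escalation decision."""
        total = sum(self.weights.values())
        score = sum(w * event.signals.get(name, 0) for name, w in self.weights.items()) / total
        return score, score >= ESCALATION_THRESHOLD

    def open_case(self, event: Event, score: float, escalate: bool) -> dict:
        """Case-management agent: create the case and pick the enrichment template."""
        case = {
            "event": event.id,
            "score": round(score, 1),
            "tier": 2 if escalate else 1,
            "template": "tier2-handoff" if escalate else "tier1-standard",
        }
        self.cases.append(case)
        return case

    def record(self, case: dict, analyst_verdict=None) -> None:
        """Feedback agent: log the action so analyst verdicts can refine weights later."""
        self.feedback_log.append({**case, "analyst_verdict": analyst_verdict})

    def dispatch(self, event: Event) -> dict:
        """Coordinator: route one event through evaluation, case creation and feedback."""
        score, escalate = self.evaluate(event)
        case = self.open_case(event, score, escalate)
        self.record(case)
        return case
```

With weights of 2, 3 and 1 for asset criticality, threat‑intelligence match and user anomaly, an event scoring 90, 80 and 40 on those factors averages about 76.7 and is routed to Tier‑2.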

All findings and analyst feedback are stored in a shared knowledge base, enabling continual learning and refinement. The feedback loop is closed through an Andon/Cord mechanism, which lets human analysts halt or override automated workflows when uncertainty arises. We propose the following design principles for AI‑driven Tier‑1 automation:

- Fine‑tuned reasoning: enabling the system to weigh multiple risk factors and apply domain‑specific rules rather than generic heuristics.

- Andon/Cord control mechanisms: allowing analysts to stop or override an AI process when uncertainty is high.

- Feedback loops: ensuring that all actions and analyst decisions are logged and used to fine‑tune model performance.

- Explainable outputs: requiring every triage or escalation step to include a rationale and references to evidence sources.

Together, these mechanisms help SOC automation evolve from rigid playbook execution toward a more adaptive and resilient system.
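The Andon/Cord and explainable‑output principles can be combined in a small gate that every automated action must pass. In this sketch, which assumes a hypothetical `min_confidence` threshold of 0.8, an action executes only if it carries a rationale and evidence, its confidence is high enough and no analyst has pulled the cord; every outcome is written to an audit trail that can feed the feedback loop.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    confidence: float  # model's self-reported confidence in [0, 1]
    rationale: str
    evidence: list     # references to evidence sources


class AndonCord:
    """Gate for automated Tier-1 actions that analysts can halt at any time."""

    def __init__(self, min_confidence: float = 0.8):  # hypothetical threshold
        self.min_confidence = min_confidence
        self.pulled = False
        self.audit = []  # every outcome is kept for the feedback loop

    def pull(self, analyst: str, reason: str) -> None:
        self.pulled = True
        self.audit.append(("halted", analyst, reason))

    def release(self, analyst: str) -> None:
        self.pulled = False
        self.audit.append(("resumed", analyst, ""))

    def gate(self, decision: Decision) -> str:
        # Explainable outputs: refuse any decision without a rationale and evidence.
        if not decision.rationale or not decision.evidence:
            raise ValueError("decision must include a rationale and evidence")
        if self.pulled or decision.confidence < self.min_confidence:
            outcome = "queued_for_analyst"
        else:
            outcome = "executed"
        self.audit.append((outcome, decision.action, decision.rationale))
        return outcome
```

Low‑confidence decisions are queued rather than dropped, so the analyst still sees them; pulling the cord routes everything to humans until it is released.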

Key Limitations and Design Considerations

True Tier‑2 automation demands robust reasoning capabilities that are still emerging. The proposed framework covers Tier‑1 and some Tier‑2 activities; full Tier‑2 automation will require further advances in reasoning, explainability and agent coordination. Additional design considerations include:

- Dedicated GPU resources for inference at scale.

- Data privacy and regional compliance controls (which differ across cloud providers).

- Human‑in‑the‑loop oversight and Andon/Cord mechanisms.

- Modular integration with existing SOC technologies.

Achieving a balance between automation and manual oversight is crucial to ensure trust and reliability.
